We’re excited to announce a new plugin for our Talk platform: Toxic Comments.

If you enable this plugin in Talk, it will pre-screen comments for toxic tone or language and give commenters a chance to change their wording before they post.

Here’s what it does (and please excuse our language while we demonstrate):

![[ANIMATED IMAGE] A comment box where someone types a swearword and the system posts a message saying that the comment may breach the guidelines, and that the commenter has one chance to re-edit or submit anyway.](https://coralproject.net/wp-content/uploads/2017/10/toxic1.gif)

Someone writes a comment. If the system thinks that the language might be toxic, the commenter is shown a warning.

They can choose to change their wording or post it anyway. To make the system harder to game, commenters get only one chance per comment to rethink their wording before it is submitted.

![[ANIMATED IMAGE] A comment box where someone places periods inside a swear word to try and trick the system. A notification says that a moderator will review the comment as it might break the guidelines.](https://coralproject.net/wp-content/uploads/2017/10/toxic2.gif)

If the system still thinks that the language is toxic, the comment will be held for review by a human moderator, who will make the final decision on whether or not a comment is rejected. Published comments can still be reported by other users as usual.
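The warn-once, then hold-for-review flow described above can be sketched as a small decision function. This is not the plugin's actual code. The `DraftComment` and `Outcome` types, the `submit` function, and the 0.8 cutoff are all hypothetical, and the toxicity score is assumed to arrive already computed as a probability between 0 and 1.

```python
from dataclasses import dataclass
from enum import Enum

# Hypothetical cutoff: the post does not say what threshold the plugin uses.
TOXICITY_THRESHOLD = 0.8


class Outcome(Enum):
    PUBLISHED = "published"
    WARNED = "warned"          # commenter is asked to rethink their wording
    HELD_FOR_REVIEW = "held"   # a human moderator makes the final call


@dataclass
class DraftComment:
    text: str
    warned: bool = False  # has this comment already used its one chance?


def submit(draft: DraftComment, toxicity: float) -> Outcome:
    """Decide what happens to a submission, given its toxicity score (0.0-1.0)."""
    if toxicity < TOXICITY_THRESHOLD:
        return Outcome.PUBLISHED
    if not draft.warned:
        # First toxic attempt: warn once and let the commenter edit or resubmit.
        draft.warned = True
        return Outcome.WARNED
    # Still toxic after the warning: never auto-reject, queue it for a moderator.
    return Outcome.HELD_FOR_REVIEW


if __name__ == "__main__":
    draft = DraftComment("an example comment")
    print(submit(draft, 0.93))  # first toxic attempt gets a warning
    print(submit(draft, 0.91))  # second toxic attempt is held for review
```

The sketch never rejects a comment by itself; the worst outcome is a hold, which leaves the decision to a person.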

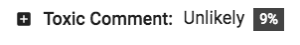

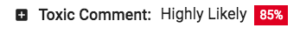

Every comment also receives a ‘likely to be toxic’ score, visible only to moderators. This will help community moderators set the right reporting threshold for their site.
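One way to picture how a moderator-only score pairs with a site-chosen threshold is a queue that surfaces comments at or above the cutoff, most likely toxic first. The `ScoredComment` type, the `moderation_queue` function, and the sorting rule are illustrative assumptions, not Talk's interface.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ScoredComment:
    comment_id: str
    text: str
    toxicity: float  # "likely to be toxic" score, shown only to moderators


def moderation_queue(comments: list[ScoredComment], threshold: float) -> list[ScoredComment]:
    """Return comments at or above the site's threshold, highest score first."""
    flagged = [c for c in comments if c.toxicity >= threshold]
    return sorted(flagged, key=lambda c: c.toxicity, reverse=True)
```

Lowering the threshold puts more comments in front of moderators; raising it shortens the queue at the cost of missing borderline cases.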

How it works

The likely toxicity of a comment is evaluated using scores generated by Perspective API, which is part of the Conversation AI research effort run by Jigsaw (a section of Google that works on global problems around speech and access to information).

Perspective API uses machine learning, trained on existing databases of accepted and rejected comments, to estimate the probability that a comment is abusive and/or toxic. It currently works only in English, but the system is designed to support multiple languages.

To activate our plugin, each news organization applies for an API key from Jigsaw (click “Request API access” on this site). Sites can also work with Jigsaw to create an individualized data set trained specifically on their own comment history.
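For reference, a request for a toxicity score looks roughly like the sketch below, which uses only the Python standard library. It assumes the `v1alpha1` `comments:analyze` endpoint and the `TOXICITY` attribute from Jigsaw's public documentation at the time of writing, so check the current Perspective API docs before relying on it. The helper names and the `YOUR_API_KEY` placeholder are hypothetical, and this is not how the Talk plugin itself is written.

```python
import json
import urllib.parse
import urllib.request

# Endpoint as documented for the alpha release; may change as the API evolves.
ANALYZE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_request_body(text: str) -> dict:
    """Ask Perspective API for the TOXICITY attribute of an English comment."""
    return {
        "comment": {"text": text},
        "languages": ["en"],
        "requestedAttributes": {"TOXICITY": {}},
    }


def parse_toxicity(response: dict) -> float:
    """Pull the summary probability (0.0-1.0) out of an analyze response."""
    return response["attributeScores"]["TOXICITY"]["summaryScore"]["value"]


def score_comment(text: str, api_key: str, timeout: float = 5.0) -> float:
    """Send one comment to Perspective API and return its toxicity probability."""
    url = f"{ANALYZE_URL}?{urllib.parse.urlencode({'key': api_key})}"
    req = urllib.request.Request(
        url,
        data=json.dumps(build_request_body(text)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return parse_toxicity(json.load(resp))


# Usage (requires a real key and network access):
# score = score_comment("some comment text", "YOUR_API_KEY")
```

Keeping the request body and response parsing in separate functions makes them easy to test without calling the API.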

Perspective API was released earlier this year and is currently in alpha (meaning that it is continually being refined and improved). Jigsaw should certainly be praised for devoting serious resources to this issue and for making their work available for others, including us, to use.

We’ve talked with their team on several occasions, and have been impressed by their dedication and commitment to this issue. These are smart people who are trying to improve a broken part of the internet.

Responses to Perspective API

So far, Perspective API has received a mixed response. The first release, though intended as an early test version of Jigsaw’s approach, seemed to have several serious shortcomings. Experiments by the interaction designer Caroline Sinders (who has also done work with us) suggested that it was missing some key areas of focus, while Violet Blue, writing for Engadget, drew on Jessamyn West’s investigation, among others, to show that the system was returning some truly troubling false positives. For their part, Jigsaw says it is aware of these issues and recently wrote a blog post about the limitations of models such as theirs.

If the system were intended to make moderation decisions without human intervention, we believe that Perspective API would be a long way from being ready for us to implement in Talk. But according to its creators, the goal for Perspective API has never been to replace human moderators.

Instead, it is intended to use computing power to make moderators’ jobs faster and easier by quickly identifying the comments that clearly need immediate attention. It’s a machine for harvesting some of the low-hanging fruit, and at that, it seems to do a useful job.

That’s why we’ve chosen this kind of implementation in our plugin: we intend for it to help commenters improve their behavior, and also to pull what are potentially the worst comments for review. We’re trying out different models as we implement it, to reduce the number of false positives.
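Reducing false positives starts with measuring them. Assuming a sample of comments that moderators have already labeled, a sketch like this one compares how often a candidate model (or threshold) would hold acceptable comments against how many rejected comments it would catch. The `LabeledComment` type and both metric functions are hypothetical illustrations, not part of Talk or Perspective API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LabeledComment:
    score: float     # toxicity probability from the candidate model (0.0-1.0)
    is_toxic: bool   # the moderator's eventual decision for this comment


def false_positive_rate(sample: list[LabeledComment], threshold: float) -> float:
    """Fraction of acceptable comments the model would still hold for review."""
    acceptable = [c for c in sample if not c.is_toxic]
    if not acceptable:
        return 0.0
    wrongly_held = sum(1 for c in acceptable if c.score >= threshold)
    return wrongly_held / len(acceptable)


def catch_rate(sample: list[LabeledComment], threshold: float) -> float:
    """Fraction of toxic comments the model would hold for review."""
    toxic = [c for c in sample if c.is_toxic]
    if not toxic:
        return 0.0
    caught = sum(1 for c in toxic if c.score >= threshold)
    return caught / len(toxic)
```

Running both functions over each candidate's scores for the same labeled sample shows the trade-off: a higher threshold usually lowers the false-positive rate but lets more toxic comments through.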

Improvements

We’ve noticed that many of the early issues with Perspective API already seem to have improved: the phrases that Jessamyn West tested now get higher toxicity probability scores, and the evasion techniques detailed in this academic paper from the University of Washington no longer seem to work.

While Jigsaw’s system is continually learning and improving, we don’t expect it to catch every unpleasant comment. It is not a failure of our system if something unpleasant gets through – language is tricky, and each toxic comment that the plugin holds for review is already improving the space.

This is just one of several tools (including Suspect words, Banned words, suspensions, user history, flagging karma) that Talk contains to detect poor or unhelpful commenter behavior.
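As an illustration of how several checks can feed the same review queue, the sketch below collects the reasons a comment might need a moderator's attention. The word lists, the 0.8 threshold, and the assumption that every signal routes a comment to review (rather than rejecting it outright) are hypothetical; Talk's Banned words and Suspect words features may behave differently.

```python
from __future__ import annotations

import re

# Hypothetical cutoff for the "likely toxic" signal.
TOXICITY_THRESHOLD = 0.8


def review_reasons(
    text: str,
    toxicity: float,
    *,
    banned_words: set[str],
    suspect_words: set[str],
) -> list[str]:
    """List every reason this comment should go to a human moderator."""
    # Naive tokenization: lowercase runs of letters and apostrophes.
    words = set(re.findall(r"[a-z']+", text.lower()))
    reasons = []
    if words & {w.lower() for w in banned_words}:
        reasons.append("banned word")
    if words & {w.lower() for w in suspect_words}:
        reasons.append("suspect word")
    if toxicity >= TOXICITY_THRESHOLD:
        reasons.append("likely toxic")
    return reasons  # an empty list means nothing flagged the comment
```

Exact word matching is easy to evade with tricks like periods inside a word, which is one reason to combine it with a model-based score.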

We’re going to keep following Jigsaw’s work in this area, and depending on the success of this plugin, may look into other ways to integrate this work. We will, however, always rely on human judgement to make the final call. We believe in machine-assisted, not machine-run community spaces.

If you have any thoughts about Perspective API and how we’re implementing this feature, please leave them below. (And no, we haven’t activated it below, so please don’t try to test it here.)

Toxic Avenger photo by Doug Kline, CC-BY 2.0
